OpenAI announced its new flagship model, GPT-4o. The 'o' comes from 'omni', meaning all-encompassing. Whereas earlier GPT models mainly took text input, GPT-4o also takes voice and images as input, reasons over them together, and answers naturally. We can now communicate with it almost as we would with a person. Its thinking patterns, emotional reactions, and even hesitations in speech have become more humanlike. Its ability to pick up unspoken context from audiovisual information has also reached a higher level.

The earlier voice feature, built on GPT-3.5 and GPT-4, ran three separate models in sequence: one converted audio to text, the GPT model produced a text reply, and a third converted that text back to audio. Much information was lost along the way, so the model could not perceive tone of voice, multiple speakers, or background sound, and could not output laughter, singing, or emotional expression.
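To make the information loss concrete, here is a toy sketch of a three-stage pipeline. It is not OpenAI's code: the `Audio` type and the `transcribe`, `respond`, and `synthesize` functions are hypothetical stand-ins, and the only point is that once speech is reduced to plain text, nothing downstream can recover the tone or the background sound.

```python
from dataclasses import dataclass


@dataclass
class Audio:
    """Hypothetical stand-in for an audio clip."""
    words: str        # what was said
    tone: str         # how it was said, e.g. "excited"
    background: str   # e.g. "party noise"


def transcribe(audio: Audio) -> str:
    # Stage 1 (audio to text): only the words survive this step.
    return audio.words


def respond(text: str) -> str:
    # Stage 2 (text to text): placeholder for the language model.
    return f"You said: {text}"


def synthesize(text: str) -> Audio:
    # Stage 3 (text to audio): the tone is a fixed default because
    # nothing about the speaker's tone ever reached this stage.
    return Audio(words=text, tone="neutral", background="")


def cascaded_voice_mode(audio: Audio) -> Audio:
    return synthesize(respond(transcribe(audio)))


calm = Audio("Happy birthday", tone="calm", background="silence")
excited = Audio("Happy birthday", tone="excited", background="party noise")

# Both inputs collapse to the same text, so the replies are identical.
print(cascaded_voice_mode(calm) == cascaded_voice_mode(excited))
```

The two inputs differ only in tone and background, yet the pipeline answers them identically, which is the limitation described above.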

GPT-4o is a new single model in which all inputs and outputs are processed by the same neural network. Text, vision, and audio are handled together, allowing natural answers without context being lost between separate models. OpenAI has released GPT-4o to ChatGPT Plus users and says it will soon be available to enterprise users.

OpenAI also released several demos showing GPT-4o in situations that might occur in real life.

In everyday conversation, it makes jokes and laughs awkwardly along with people. Shown a birthday cake, it recognizes that it is someone's birthday and offers congratulations. Shown a puppy, it makes a fuss about how adorable it is. In the video made with Be My Eyes, it describes the sights in detail, like a guide, to a visually impaired person on a trip. Two instances of GPT-4o running on two smartphones can even talk to each other.

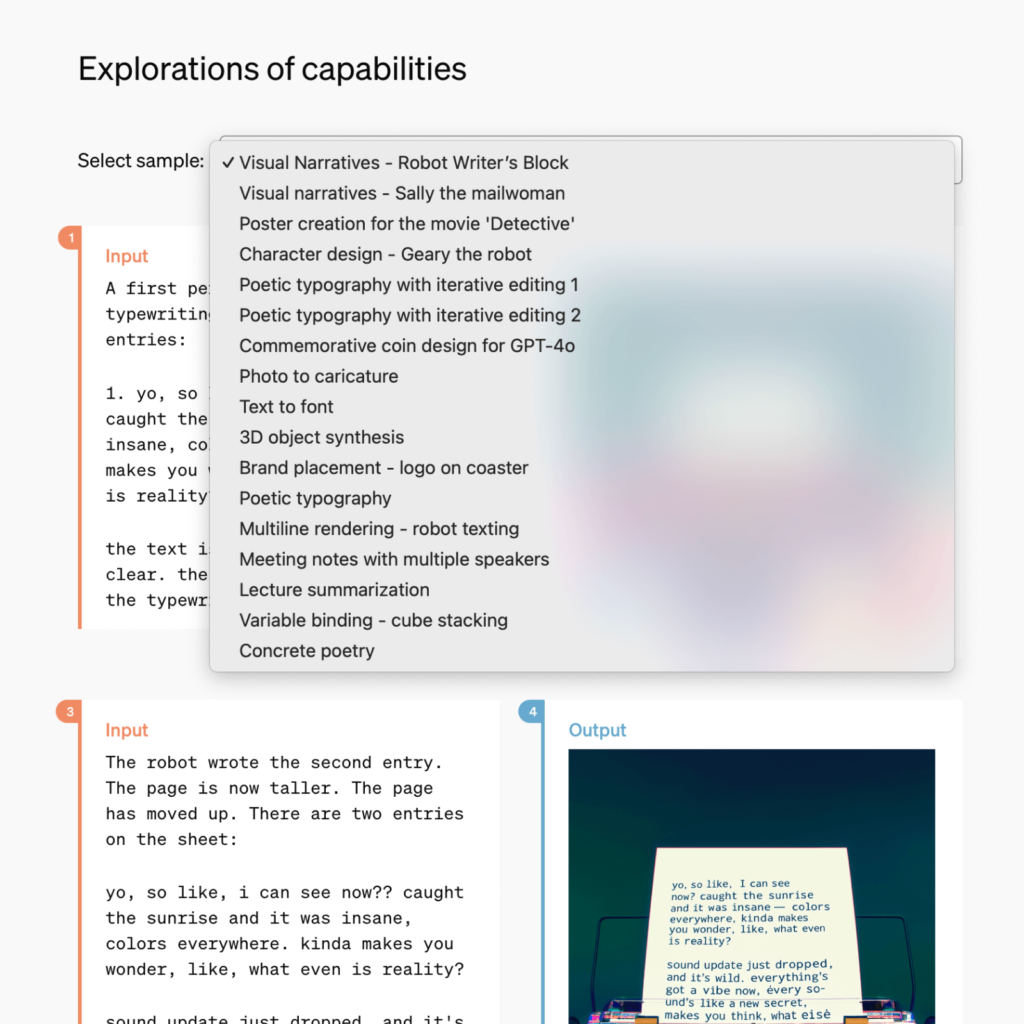

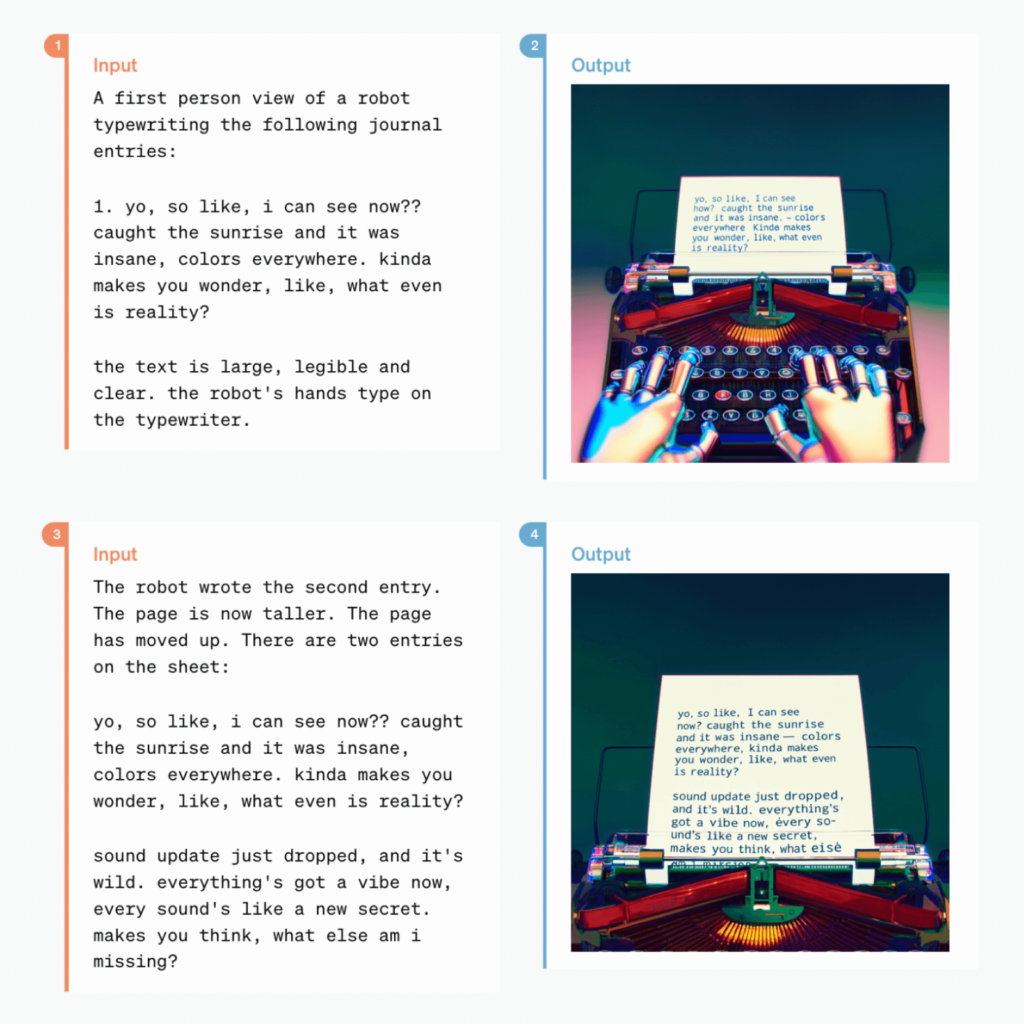

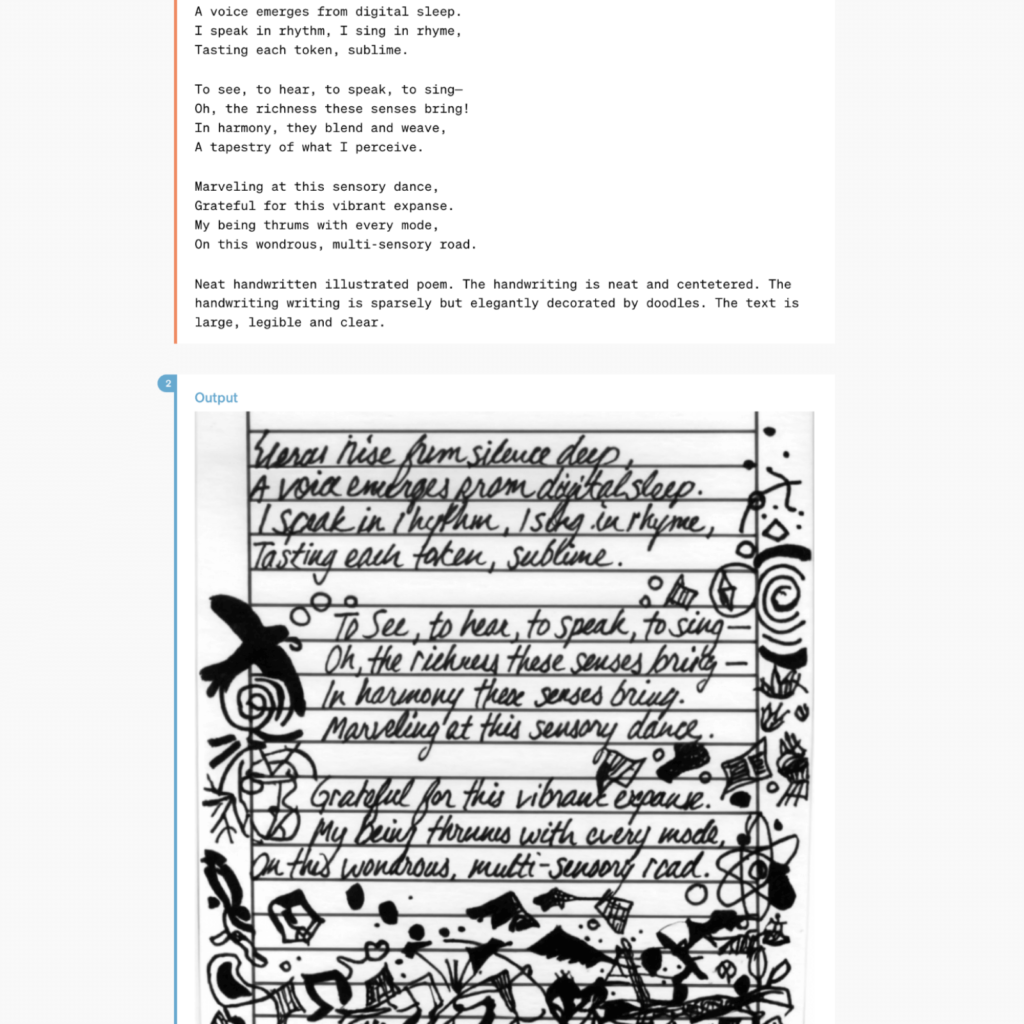

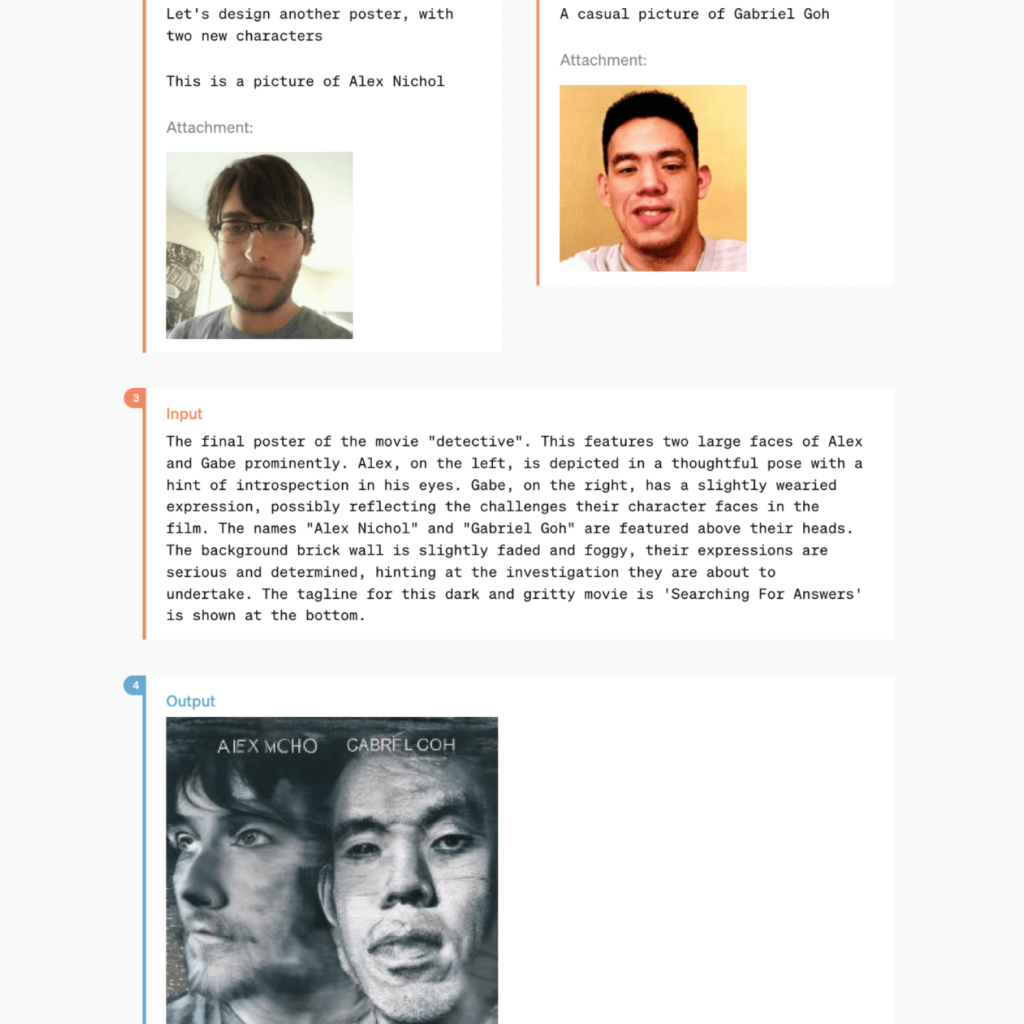

Image generation has also improved. It can handle a variety of graphic work, such as creating a series of images that tell a story in a consistent style, making a movie poster from two portraits, designing characters, rendering handwriting-like typography, and adding a logo to a mockup.

The videos were filmed like vlogs, with people who did not appear to be professional actors. This seems intended to show human imperfection and so reduce the fear of a technology that has become so similar to humans. The video in which the model expresses strong emotion about the dog, even though it probably feels nothing, gave me a lot to think about.

OpenAI says it is building guardrails against AI risks together with more than 70 external experts from a range of fields. For now, as a precaution, audio output is limited to a small set of preset voices. Unfortunately, there seems to be no safety plan beyond self-assessment scores. It is also unfortunate that the video about the model's restrictions treated them as a light joke.