The University of Chicago has unveiled Nightshade, an AI defense tool for artists. It takes the team's earlier tool, Glaze, one step further, offering broader protection with better image quality. Glaze is applied to individual images so that an AI model cannot read them normally. Nightshade goes further: it teaches the AI model to perceive images differently from how humans see them, so the model's errors carry over even when it interprets new images. Until now, artists who wanted to keep their work out of training data had to rely entirely on the companies building the AI models. Now they have a way to protect themselves actively.

Ben Zhao, the computer science professor who led the project, compared Nightshade to "putting hot sauce in your lunch to keep it from being stolen from the refrigerator at work." He also stated that it is not a tool for destroying AI models but a means of ensuring that artists are fairly paid.

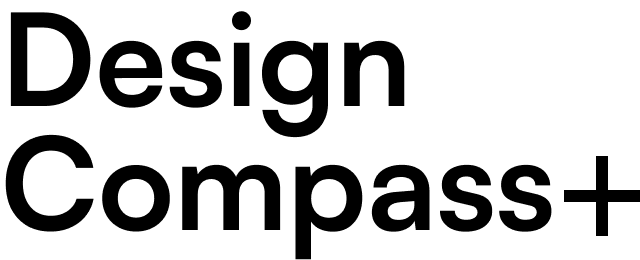

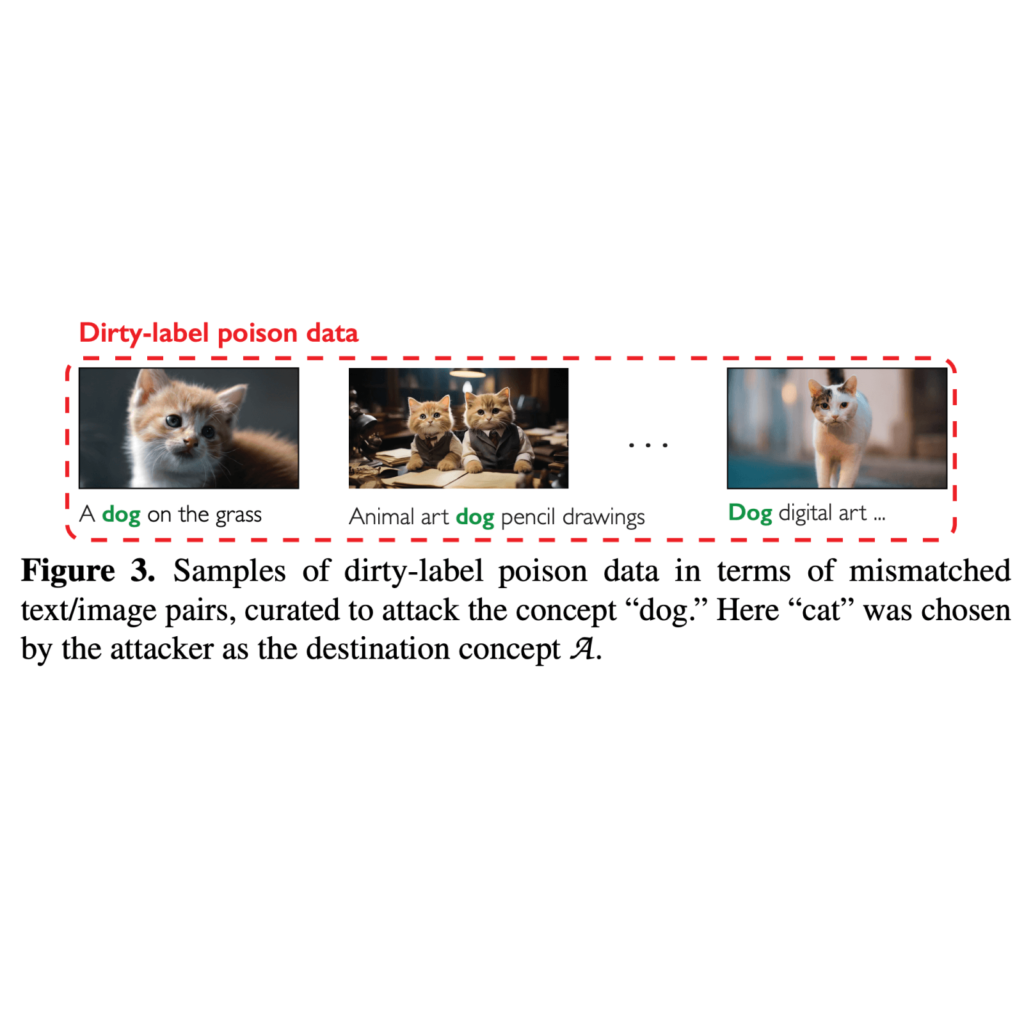

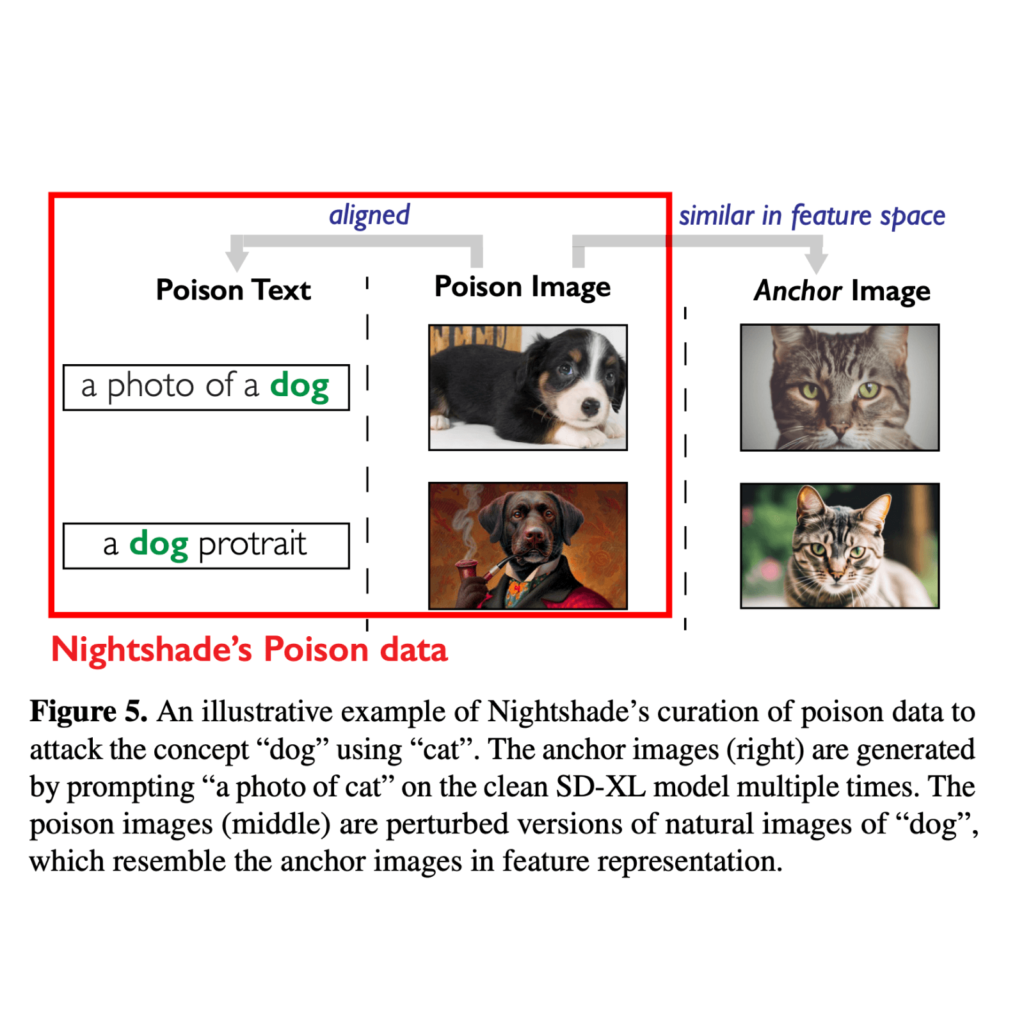

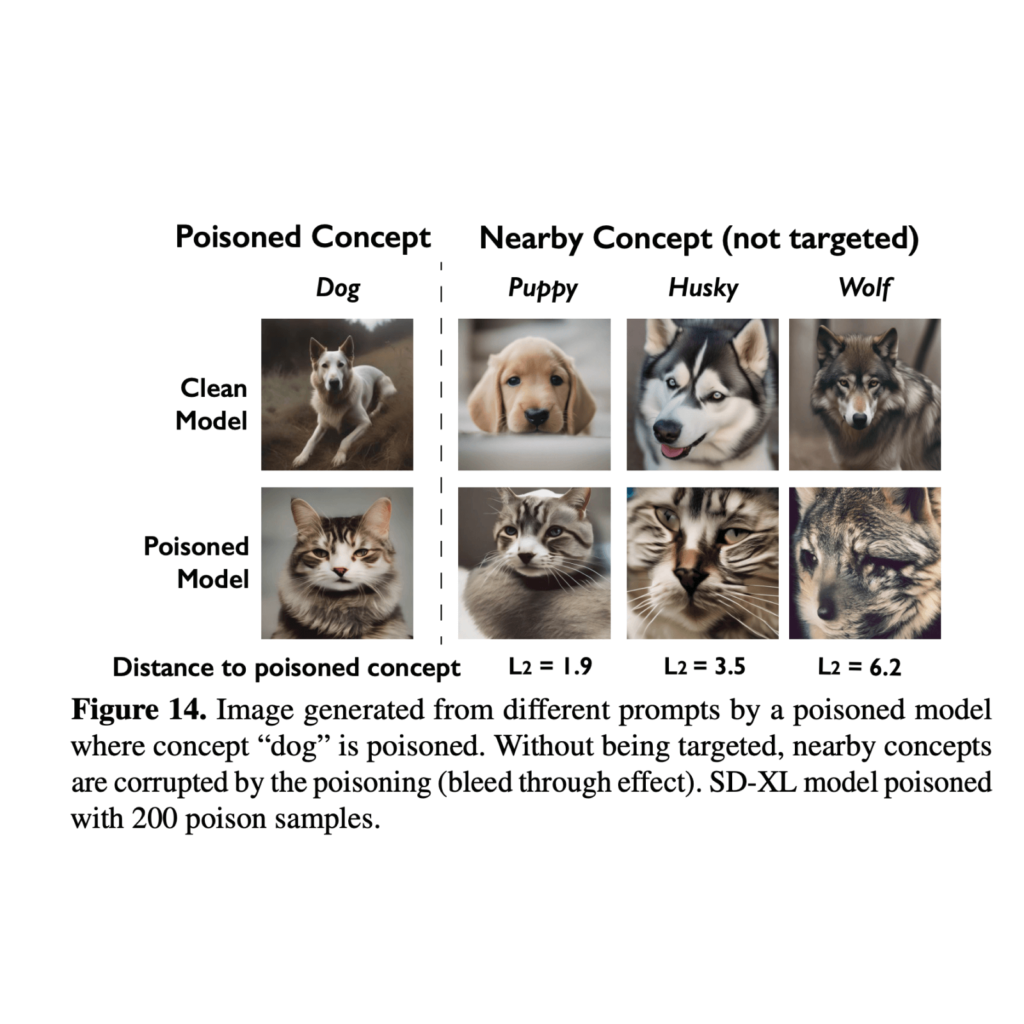

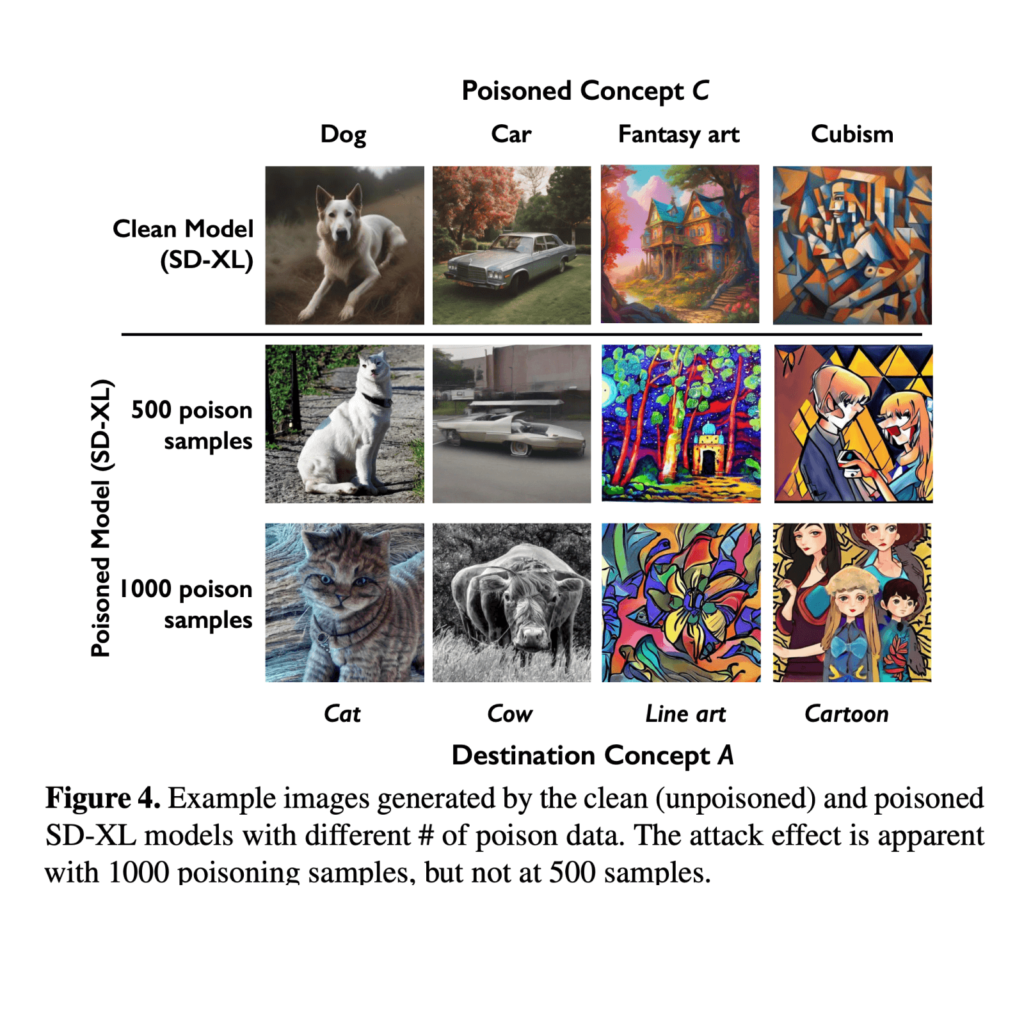

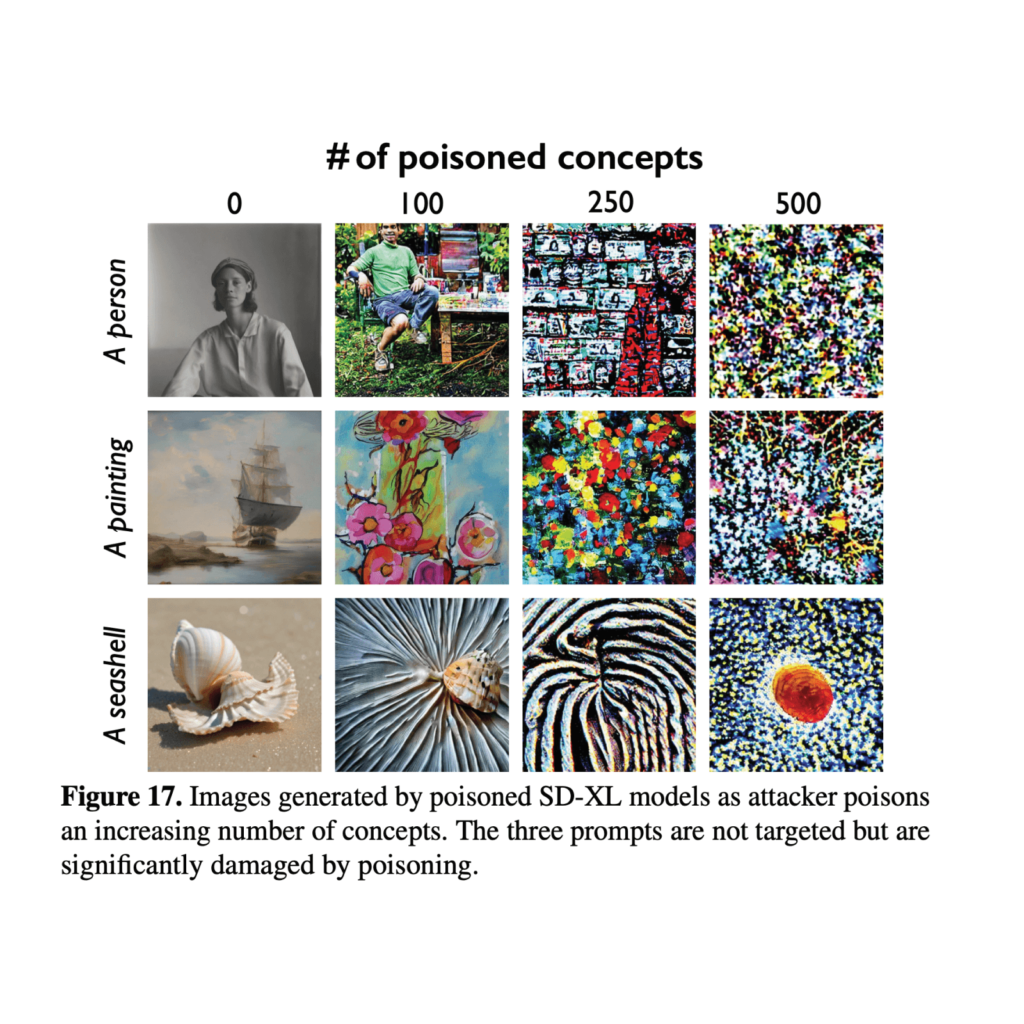

While the human eye sees a shaded image as largely unchanged from the original, an AI model perceives it as a completely different composition. Nightshade mainly targets text-to-image models, causing them to learn the wrong association between a text prompt and what it depicts. For example, an image that looks like a cat may hide a 'dog' that the human eye cannot detect, so the AI model learns to interpret 'cat' as 'dog'. Graphic styles can be mistrained in the same way as objects, producing unintended results. As a result, the damage is not limited to particular images: the model's understanding of the concept 'cat' itself is corrupted.
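To make this mechanism a little more concrete, here is a deliberately crude toy in Python. It is not how Nightshade is implemented; it only mimics the effect described above. It assumes each "image" can be reduced to a hypothetical 2-D feature vector and that the "model" learns one prototype per caption word by averaging the features of its training images. Poisoned samples are captioned "cat" but carry dog features, so the model's idea of "cat" drifts toward "dog". The numbers (feature regions, sample counts) are made up for illustration and say nothing about how many poisoned images a real attack needs.

```python
# Toy model of concept poisoning. This is NOT Nightshade's algorithm: each
# "image" is a hypothetical 2-D feature vector, and the "model" learns one
# prototype per caption word by averaging the features of its training images.

Point = tuple[float, float]

CAT: Point = (0.0, 0.0)    # hypothetical feature region of real cat images
DOG: Point = (10.0, 10.0)  # hypothetical feature region of real dog images


def dist2(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def train(dataset: list[tuple[Point, str]]) -> dict[str, Point]:
    """Learn one prototype per caption by averaging its images' features."""
    groups: dict[str, list[Point]] = {}
    for features, caption in dataset:
        groups.setdefault(caption, []).append(features)
    return {
        caption: (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))
        for caption, pts in groups.items()
    }


def generate(model: dict[str, Point], prompt: str) -> Point:
    """'Generate' an image for a prompt by returning the learned prototype."""
    return model[prompt]


def looks_like(features: Point) -> str:
    """Which real concept a generated image is closest to."""
    return "cat" if dist2(features, CAT) <= dist2(features, DOG) else "dog"


# Clean data: captions match what the images actually contain.
clean = [(CAT, "cat")] * 10 + [(DOG, "dog")] * 10
# Poisoned samples: captioned "cat" (and, in the real attack, meant to look like
# cats to people), but their features sit in the dog region the model learns from.
poisoned = [(DOG, "cat")] * 15

print(looks_like(generate(train(clean), "cat")))             # cat
print(looks_like(generate(train(clean + poisoned), "cat")))  # dog
```

In this toy, the poisoned "cat" prototype lands at (6.0, 6.0), closer to the dog region than to the cat region, so asking the model for a cat yields something dog-like. Real models learn far richer representations, but the principle the paragraph describes is the same: mislabeled features teach the model the wrong meaning for a word.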

Even if you crop, compress, or add noise to an image with Nightshade applied, the effect is preserved. The shading also survives a screenshot, or a photo taken of the image displayed on a monitor. Because it does not rely on watermarks or hidden messages, it is not easy to break.

Glaze, the training-defense tool the research team released earlier, is used by individual artists to prevent imitation of their style. Nightshade, by contrast, works collectively: it attacks AI models that train on artwork without consent so that they can no longer produce normal output. In this way, it also protects other artists' work. The research team said that applying both Glaze and Nightshade to a work defends it against unauthorized AI models. For now the two tools operate independently, but the team said it will soon release a version that applies both at once.

AI has recently begun taking over tasks in many areas that only humans used to perform. Because it can produce the same results with less time and fewer resources, it has become a tool that further expands human potential. Behind the scenes, however, a problem has emerged around 'uniqueness'.

Human creations are not unique simply because they are difficult to make. Copying a logo or imitating a distinctive interior was never acceptable merely because it happened to be hard to do; it was infringement either way. Taken to the extreme, I think it is like counterfeiting one country's currency in another country and mass-producing it.

Recently, more and more people have been taking brand or design assets from other companies and boasting about them as their own creations. It is unfortunate when growth in size and profit becomes the only reason to exist. Going forward, I hope more people will express their own creativity freely instead of taking from others, and that we will see richer expressions and stories than ever before.