Google has unveiled Gemini, its large-scale AI model. Bard, released in response to ChatGPT, was a disappointment because of its weak performance, but Google has now returned with a far more capable model. Unlike other AIs that focus on implementation, Gemini delivered an impressive showcase of 'inference', that is, reasoning.

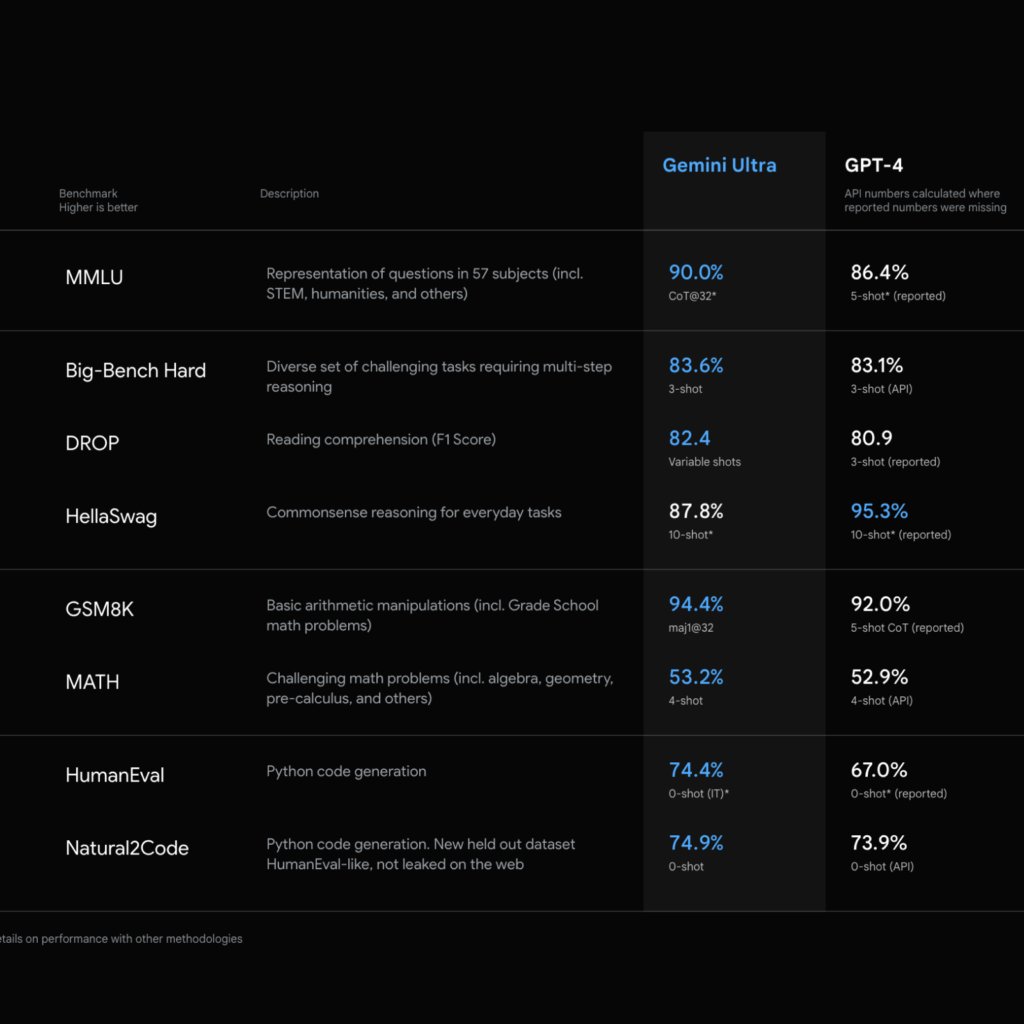

Gemini Ultra scored 90.0% on MMLU (Massive Multitask Language Understanding), a benchmark that combines 57 subjects including mathematics, physics, history, law, medicine, and ethics, surpassing the human expert score of 89.8%. A benchmark is only one measure of performance, but the result is still striking.

Gemini is not limited to text or images: it can perceive and draw inferences from many types of information. It can even interpret information such as musical notation, which people use as an abstract, agreed-upon way of conveying meaning. It is, quite literally, a model that can go from anything to anything.

The demo video released by Google feels as natural as the AI assistants you have only seen in movies. Gemini understands hand-drawn sketches and talks about them like a human would, reaching conclusions through fairly complex reasoning. In a game of guessing a country from several clues, it can tell which country the user means when they point to it on a world map. It can watch a video of a person imitating a scene from The Matrix and guess which movie is being imitated. It can even make complex inferences that only make sense if you know the cultural context.

Beyond understanding and thinking like a human, it can also write the rules that machines follow. It can write code in popular programming languages such as Python, Java, and C++, and it performs strongly on coding benchmarks such as HumanEval.

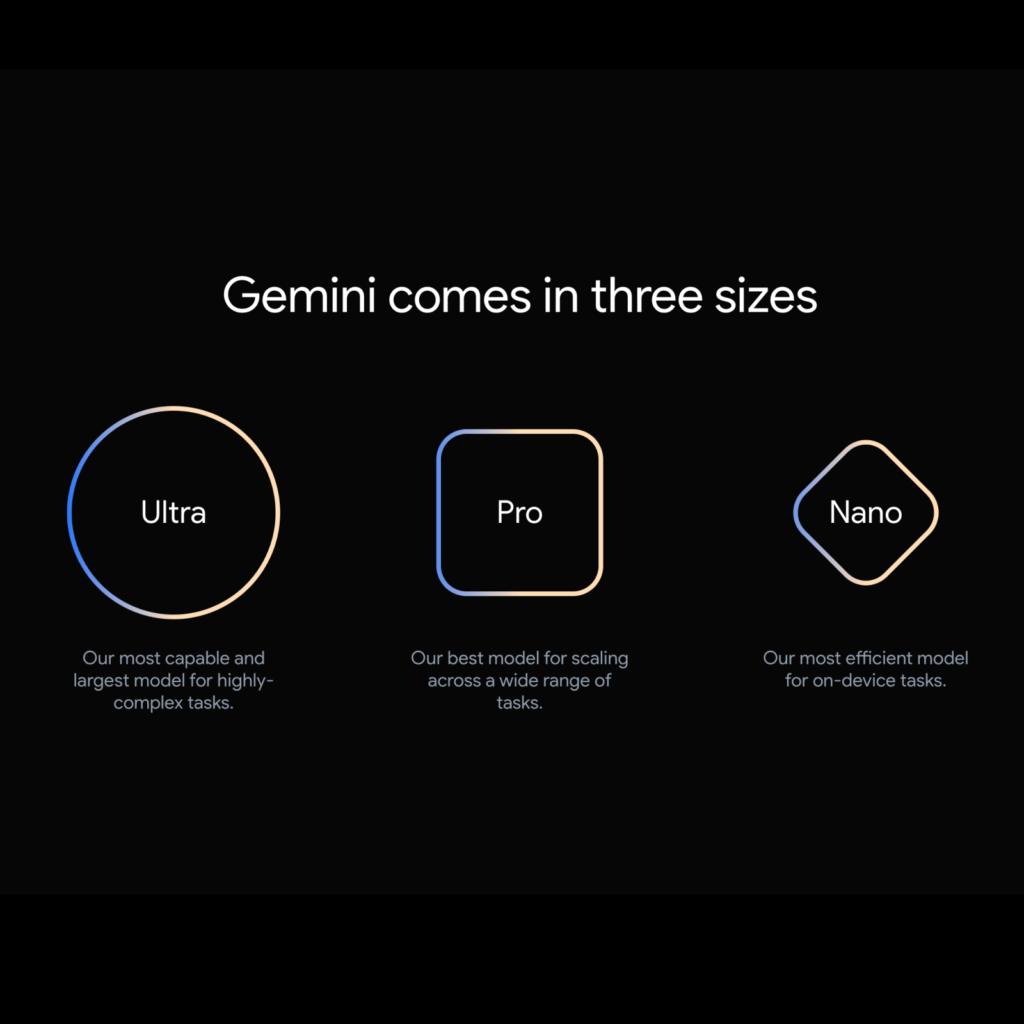

Gemini 1.0 comes in three models: Gemini Ultra, Gemini Pro, and Gemini Nano. Each is sized for a different level of performance and scale, and they will be rolled out across Google's services and products. Gemini Pro now powers Bard, and Gemini Nano runs on the Pixel 8 Pro. Starting December 13th, developers and enterprise customers will be able to use Gemini Pro through the Gemini API.

I expected the ability to interpret images and video to mature quickly, but I never expected reasoning skills to advance this fast. It was surprising to see a video in which the model made inferences that a person could only make after accumulating experience over a long period of time.

I haven't seen it applied in an actual service yet, so I'll have to wait and see, but its ability to go beyond interpreting what is superficially visible and draw out the hidden meaning within has improved tremendously. If AI moves beyond verbal responses and starts responding visually, as humans do, I think a major revolution in design will follow.